Mobile Robotics Navigation Project: About the Software

Mobile Robotics Navigation – the 914 PC-BOT Software

This page is about the software I will be using for my project; check out the project index for the rest.

My main focus for this project is to allow the robot to navigate autonomously through its surroundings. One way to do this is ‘dead reckoning’: using the wheel positions to calculate how far the robot has travelled and how much it has rotated, and from that working out its current position. The robot is driven by stepper motors, so we can count the steps to get this data. However, dead reckoning by itself is not always accurate because of wheel slip, and it won’t help the robot if it meets unexpected obstacles.
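To make the dead reckoning idea concrete, here is a minimal sketch of differential-drive odometry from step counts. It assumes the robot has two independently driven wheels and that we can read how many steps each wheel moved since the last update. The values of `STEPS_PER_REV`, `WHEEL_DIAMETER_M` and `WHEEL_BASE_M` are hypothetical placeholders, not measured PC-BOT figures, and `update_pose` is an illustrative helper rather than part of the 914 API.

```python
import math
from dataclasses import dataclass

# Hypothetical geometry: placeholder values, not measured 914 PC-BOT figures.
STEPS_PER_REV = 200        # steps per wheel revolution (assumed)
WHEEL_DIAMETER_M = 0.15    # wheel diameter in metres (assumed)
WHEEL_BASE_M = 0.40        # distance between the two drive wheels in metres (assumed)

# Distance a wheel rolls for one step.
METRES_PER_STEP = math.pi * WHEEL_DIAMETER_M / STEPS_PER_REV


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, anticlockwise from the x axis


def update_pose(pose: Pose, left_steps: int, right_steps: int) -> Pose:
    """Advance the pose by the steps each wheel moved since the last update."""
    d_left = left_steps * METRES_PER_STEP
    d_right = right_steps * METRES_PER_STEP
    d_centre = (d_left + d_right) / 2.0          # distance moved by the robot's centre
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading (radians)
    # Use the heading half-way through the move for a better approximation.
    mid_heading = pose.theta + d_theta / 2.0
    x = pose.x + d_centre * math.cos(mid_heading)
    y = pose.y + d_centre * math.sin(mid_heading)
    # Wrap the new heading into the range -pi..pi.
    new_theta = pose.theta + d_theta
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose(x, y, theta)
```

Calling `update_pose(Pose(), 200, 200)` moves the robot one wheel circumference straight ahead, while equal and opposite step counts spin it on the spot. Because every error (slip, missed steps) is added into the running pose, the estimate drifts over time, which is why it needs correcting with sensor data.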

Sensors must also be used to measure the robot’s distance to obstacles, walls and its general surroundings. If we combine this data with the wheel odometry, then we can build up a system of simultaneous localisation and mapping (SLAM). I will be using a Microsoft Kinect sensor to judge distance, rotating the robot to get 360° of vision, which should enable a fairly accurate map of the surroundings to be built up, or at least allow the robot to easily follow a predefined floor plan.
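One way to picture how odometry and range data fit together is an occupancy grid: each distance reading is projected from the robot’s current pose into world coordinates, and the grid cell it lands in is marked. The sketch below is mapping with poses taken as known from odometry, which is only half of SLAM (full SLAM would also correct the pose using the map). The cell size, grid size and the helper names `make_grid`, `world_to_cell` and `add_reading` are all assumptions for illustration.

```python
import math

# Hypothetical map parameters (assumptions, not taken from the project).
CELL_SIZE_M = 0.05   # each grid cell covers 5 cm x 5 cm
GRID_CELLS = 200     # 200 x 200 cells = 10 m x 10 m, robot starts at the centre


def make_grid():
    """Create an empty map; each cell counts how many readings ended in it."""
    return [[0] * GRID_CELLS for _ in range(GRID_CELLS)]


def world_to_cell(x, y):
    """Convert world coordinates (metres) to (row, col), origin at the grid centre."""
    col = int(math.floor(x / CELL_SIZE_M)) + GRID_CELLS // 2
    row = int(math.floor(y / CELL_SIZE_M)) + GRID_CELLS // 2
    return row, col


def add_reading(grid, robot_x, robot_y, robot_theta, bearing, distance):
    """Mark the cell where one range reading ended.

    robot_x, robot_y, robot_theta: robot pose from odometry (metres, radians)
    bearing: direction of the reading relative to the robot's heading (radians)
    distance: measured range in metres
    Returns the (row, col) that was marked, or None if it fell outside the map.
    """
    angle = robot_theta + bearing
    hit_x = robot_x + distance * math.cos(angle)
    hit_y = robot_y + distance * math.sin(angle)
    row, col = world_to_cell(hit_x, hit_y)
    if 0 <= row < GRID_CELLS and 0 <= col < GRID_CELLS:
        grid[row][col] += 1
        return row, col
    return None
```

Rotating the robot on the spot to get 360° of vision corresponds to calling `add_reading` repeatedly with the same position but a changing `robot_theta`. Cells hit many times are likely to be real walls or obstacles, while a single stray count may just be noise.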

The 914 PC-BOT has API support, and the API is also open source. This allows the M3 controller to be programmed from a variety of languages.

The Microsoft Kinect also has an SDK provided by Microsoft. However, 3D vision programming is probably above my abilities, so I will be using RoboRealm, which is a low-cost but comprehensive piece of machine vision software.

RoboRealm supports the Kinect directly, and provides many vision processing modules as well as support for other hardware. The 914 M3 board is not directly supported by RoboRealm, but RoboRealm does provide its own API so that third-party software can interface with the vision data it produces.

Here’s an initial test with the Kinect and RoboRealm. The Kinect is on top of the PC-BOT, and you can see my laptop running RoboRealm in the bottom right. There is an RGB preview from the Kinect on the right and the depth image on the left, where lighter objects are further away. I’ve placed two boxes in the hallway in order to calibrate the upper and lower threshold and gamma settings within RoboRealm.
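The idea behind the upper and lower thresholds can be shown with a small sketch: treat the depth image as grey levels (0 to 255, lighter meaning further away, as described above) and keep only pixels that fall between the two thresholds, so that objects in a chosen distance band, such as the two boxes, stand out. This does not reproduce RoboRealm’s own processing; `band_threshold`, `apply_gamma` and `occupied_columns` are hypothetical helpers, and the gamma formula is just one common power-law convention.

```python
def apply_gamma(value, gamma, max_value=255):
    """Power-law gamma adjustment of one pixel (one common convention)."""
    return round(max_value * (value / max_value) ** (1.0 / gamma))


def band_threshold(depth, lower, upper):
    """Return a binary mask: 255 where lower <= pixel <= upper, else 0.

    depth is a list of rows of grey levels, lighter meaning further away.
    """
    return [[255 if lower <= px <= upper else 0 for px in row] for row in depth]


def occupied_columns(mask):
    """Indices of image columns containing at least one in-band pixel.

    Column position across the image gives a rough bearing to the object.
    """
    return [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
```

For example, with a tiny 2 x 3 depth image `[[10, 100, 200], [120, 130, 250]]` and thresholds of 100 and 150, only the middle-distance pixels survive, and `occupied_columns` reports that they sit in the left and centre columns.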

I’m not the best software developer in the world (in fact I’m probably one of the worst), so my intention is to make things easy on myself. However, I’d still like to learn something and achieve something, so I’d like to actually write the mapping and navigation software myself, using data provided by RoboRealm. My preferred language is Python, although I’ve also dabbled with C#.NET.

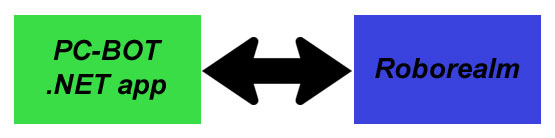

My initial approach is to write a .NET application using the 914 API, which talks to RoboRealm via sockets:

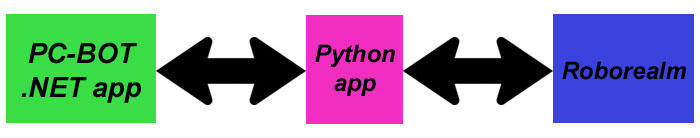

My next approach would be to write a sockets server using the 914 API for the core control of the PC-BOT, and then put the main software between this server and RoboRealm, written in whichever language I choose. This would also allow the middle module to run off board the robot, on another computer connected over the network.
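The sockets server idea could look something like the sketch below: a small TCP server that accepts newline-terminated text commands from the middle module and turns them into motor calls. It is written in Python using the standard `socketserver` module for illustration. The command names (`DRIVE`, `STOP`), the port number and the `drive`/`stop` functions are all hypothetical; the stubs stand in for whatever the real 914 API calls turn out to be.

```python
import socketserver


# Hypothetical stand-ins for the real 914 API motor calls.
def drive(left_speed, right_speed):
    return f"OK drive {left_speed} {right_speed}"


def stop():
    return "OK stop"


def handle_command(line):
    """Parse one text command such as 'DRIVE 10 -10' or 'STOP' and return a reply."""
    parts = line.strip().split()
    if not parts:
        return "ERR empty"
    cmd, args = parts[0].upper(), parts[1:]
    try:
        if cmd == "DRIVE" and len(args) == 2:
            return drive(int(args[0]), int(args[1]))
        if cmd == "STOP" and not args:
            return stop()
    except ValueError:
        return "ERR bad number"
    return f"ERR unknown command {line.strip()!r}"


class CommandHandler(socketserver.StreamRequestHandler):
    """One client connection: read newline-terminated commands, reply once per line."""

    def handle(self):
        for raw in self.rfile:
            reply = handle_command(raw.decode("utf-8", errors="replace"))
            self.wfile.write((reply + "\n").encode("utf-8"))


def serve(host="0.0.0.0", port=9000):
    """Run the server forever, handling one client at a time (port 9000 is arbitrary)."""
    with socketserver.TCPServer((host, port), CommandHandler) as server:
        server.serve_forever()
```

Running `serve()` on the robot and connecting from another machine (for example with a plain TCP client sending `DRIVE 10 10`) keeps the hardware-specific code in one small program, so the mapping and navigation module can be written in any language that can open a socket.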

I will update this page with some more stuff at some point…

About The Author

James Bruton

My name is James Bruton, I live in Southampton, UK. Please note that this is my personal project website, I have no products for sale, most of the information is provided so that you can have a go yourself...